Types of Neural Networks and When to Use Which Type

This article is based on my YouTube video, in which I explain the different types of neural networks.

In the rapidly evolving field of artificial intelligence, neural networks stand out as one of the most powerful tools for solving a wide range of complex problems.

From image and speech recognition to natural language processing and beyond, neural networks have demonstrated remarkable capabilities.

However, not all neural networks are created equal. There are various types, each suited for different tasks. Understanding these types and when to use them is crucial for any data scientist or artificial intelligence practitioner.

In this article, we discuss some major types of neural networks. We do not discuss the math here, but we outline when to use which type of neural network.


Feedforward Neural Networks (FNNs)

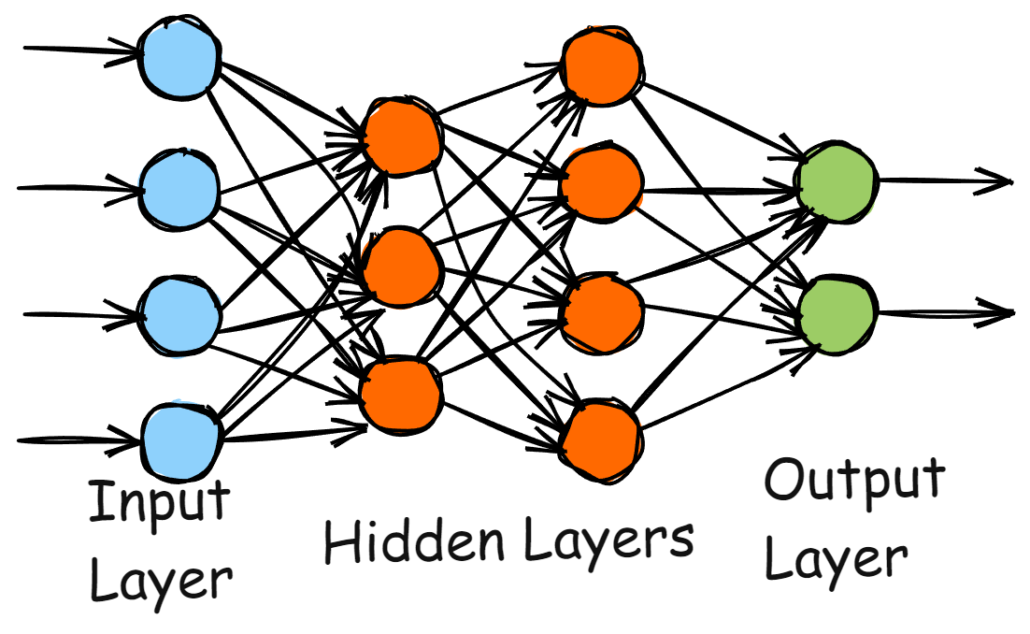

Feedforward Neural Networks (FNNs) are the simplest type of artificial neural network. Their most common form, built from fully connected layers, is the multi-layer perceptron (MLP), and the two terms are often used interchangeably.

In FNNs, information moves in one direction: from the input layer, through the hidden layers, to the output layer. The network contains no cycles or loops.

Figure: A Feedforward Neural Network (FNN)
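The one-directional flow can be made concrete with a minimal pure-Python sketch of an FNN forward pass. The weights are made-up values for illustration, not a trained model. The helper names (`dense`, `fnn_forward`) are hypothetical. In practice you would use a library such as PyTorch or Keras.

```python
def relu(z):
    # Rectified linear unit: negative inputs become 0
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    # weights[j][i] connects input i to output neuron j
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs


def fnn_forward(x, layers):
    # Each layer feeds only the next one: no cycles, no state kept between calls
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hypothetical toy weights: 2 inputs -> 2 hidden units (ReLU) -> 1 linear output
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[1.0, 2.0]], [0.1], None),
]
print(fnn_forward([3.0, 1.0], layers))  # [6.1]
```

Both hidden units output 2.0, and the output neuron computes 1.0 * 2.0 + 2.0 * 2.0 + 0.1.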

Figure: A Feedforward Neural Network (FNN)

Use Cases of FNNs

Classification and regression problems are popular use cases of FNNs.

FNNs are commonly used for straightforward classification tasks in which the relationships between the input features and the target variable are not overly complex. In a classification problem, the goal is to predict a class label from the given features, so the network's output is discrete. FNNs are also used for regression problems, where the goal is to predict a continuous value.
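The difference between classification and regression lies mainly in how the output layer is read. The sketch below assumes a network whose final layer produces raw scores (logits). The helper names and the example scores are hypothetical.

```python
import math


def softmax(logits):
    # Turn raw output-layer scores into probabilities that sum to 1
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(logits):
    # Classification: the discrete label is the index of the highest probability
    probs = softmax(logits)
    label = max(range(len(probs)), key=lambda i: probs[i])
    return label, probs


def predict_value(outputs):
    # Regression: a single linear output neuron is used directly as the prediction
    return outputs[0]


label, probs = predict_class([2.0, 0.5, -1.0])
print(label)                 # 0
print(round(sum(probs), 6))  # 1.0
print(predict_value([6.1]))  # 6.1
```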

When to use FNNs

FNNs are suitable when the data is structured (for example, tabular data with a fixed set of features) and the relationships between features are relatively straightforward.

If you are new to machine learning and plan to go deeper over time, FNNs are a great starting point. All the other types of neural networks share their building blocks: layers of weighted sums, activation functions, and training by backpropagation.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a type of deep learning architecture used to process and analyze visual data such as images. They are built from specialized layers:

- Convolutional layers detect local features such as edges and textures.
- Pooling layers reduce the spatial dimensions of the resulting feature maps.
- Fully connected layers map the detected features to the final output.

By stacking these layers, CNNs learn spatial hierarchies of features (edges, then shapes, then objects). This lets them recognize patterns and objects in images with high accuracy.

Figure: Typical CNN. Figure attribution: Aphex34, CC BY-SA 4.0, via Wikimedia Commons.
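The convolution and pooling steps described above can be illustrated on a tiny grayscale image. This is a minimal sketch under several assumptions: a single channel, stride 1, no padding, and a hand-picked vertical-edge kernel. In a real CNN, the kernels are learned during training. Like most deep learning libraries, the code actually computes cross-correlation, which is conventionally called convolution.

```python
def conv2d(image, kernel):
    # 'valid' cross-correlation with stride 1: slide the kernel over every position
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu_map(fmap):
    # Element-wise ReLU on a feature map
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2d(fmap, size=2):
    # Non-overlapping max pooling; rows/columns that do not fill a window are dropped
    return [
        [
            max(fmap[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(fmap[0]) - size + 1, size)
        ]
        for r in range(0, len(fmap) - size + 1, size)
    ]


# Hypothetical 5x5 image: dark on the left, bright on the right
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hand-picked 2x2 kernel that responds to left-to-right brightness increases
kernel = [[-1.0, 1.0],
          [-1.0, 1.0]]

features = relu_map(conv2d(image, kernel))  # 4x4 feature map
pooled = max_pool2d(features)               # 2x2 after pooling
print(pooled)  # [[2.0, 0.0], [2.0, 0.0]]
```

The 4x4 feature map responds only where brightness increases from left to right. The 2x2 max pooling then halves each spatial dimension while keeping the strongest responses.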

Use Cases of CNNs

CNNs play a central role in computer vision, with applications such as the following.

- Image Recognition: CNNs are the go-to architecture for image recognition and image classification tasks.

- Object Detection: CNNs are utilized for identifying and locating objects within images in object detection tasks.

- Video Analysis: CNNs can be adapted to analyze video data by processing frames either sequentially or in parallel.

When to Use CNNs

CNNs should be used when dealing with image data or any spatially structured data. Their ability to capture spatial hierarchies makes them highly effective for visual tasks.

Recurrent Neural Networks (RNNs)

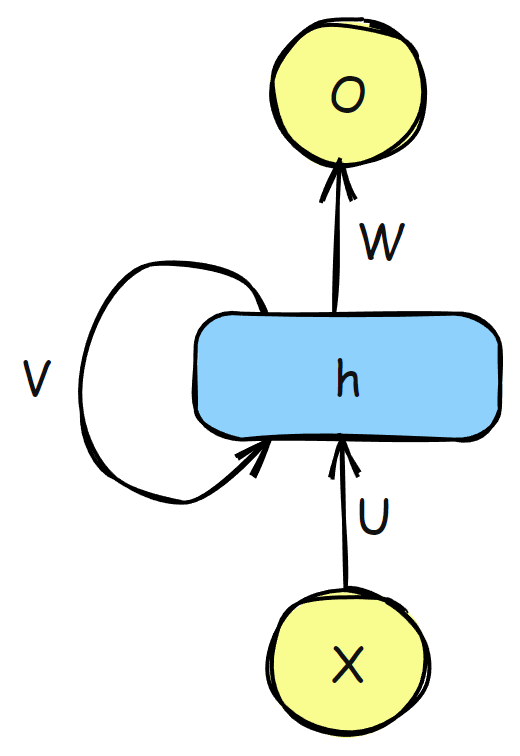

Recurrent Neural Networks (RNNs) are designed for sequential data. Unlike feedforward networks, RNNs have connections that form directed cycles. At each time step, the network combines the current input with a hidden state carried over from the previous step, and this state acts as a memory of earlier inputs. This makes RNNs well suited to tasks where context or the order of the sequence matters.

Figure: Recurrent Neural Network (RNN)
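The recurrence can be sketched with a single hidden unit. This assumes hypothetical scalar weights `w_x` and `w_h` and a bias `b`. Each new hidden state depends on both the current input and the previous state, so the same values fed in a different order produce a different result.

```python
import math


def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    # Single hidden unit for readability: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # new state depends on the previous one
        states.append(h)
    return states


# Same values, different order -> different final state
early = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
late = rnn_forward([0.0, 0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)
print(early[-1], late[-1])
```

In `early`, the influence of the first input fades as it is passed through the recurrence. In `late`, the input arrives at the last step and dominates the final state.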


Use Cases of RNNs

RNNs have been applied to a wide variety of tasks thanks to their ability to model temporal dependencies, including the following.

- Natural Language Processing (NLP): RNNs are widely used in NLP tasks such as language modeling, text generation, and machine translation.

- Speech Recognition: RNNs are effective in processing audio data for speech recognition.

- Time Series Prediction: RNNs can model temporal dependencies, making them suitable for time series forecasting.

When to Use RNNs

Use RNNs when working with sequential data, such as text or time series, where the order of the data points matters. Their ability to carry information from previous inputs forward is what makes them a good fit for these tasks.

Long Short-Term Memory Networks (LSTMs) and Gated Recurrent Units (GRUs)

LSTMs (Long Short-Term Memory networks) and GRUs (Gated Recurrent Units) are advanced types of RNNs. They are designed to mitigate the vanishing gradient problem, which makes it hard for traditional RNNs to learn long-range dependencies. They use gates to regulate the flow of information, deciding what to keep, what to update, and what to discard. This lets them retain information over much longer sequences.

Figure: LSTM. Figure attribution: Guillaume Chevalier, CC BY-SA 4.0, via Wikimedia Commons

Figure: GRU. Figure attribution: Jeblad, CC BY-SA 4.0, via Wikimedia Commons
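As an example of gating, here is a minimal sketch of one GRU step with a single hidden unit. It follows one common formulation; papers and libraries differ on which side of the update gate keeps the old state. The weights in the dictionary `p` are hypothetical. An LSTM works on the same principle but adds a separate cell state and an output gate.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x, h, p):
    # One common GRU formulation with a single hidden unit; p holds hypothetical weights
    z = sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])               # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h + p["br"])               # reset gate
    h_cand = math.tanh(p["wh"] * x + p["uh"] * (r * h) + p["bh"])  # candidate state
    # z close to 1 keeps the old state; z close to 0 replaces it with the candidate
    return z * h + (1.0 - z) * h_cand


def run(sequence, p, h0):
    h = h0
    for x in sequence:
        h = gru_step(x, h, p)
    return h


base = dict(wz=0.0, uz=0.0, wr=0.0, ur=0.0, br=0.0, wh=1.0, uh=0.0, bh=0.0)
keep = dict(base, bz=10.0)     # update gate saturated near 1: remember
forget = dict(base, bz=-10.0)  # update gate near 0: overwrite with candidate
zeros = [0.0] * 50

kept = run(zeros, keep, 0.9)
forgot = run(zeros, forget, 0.9)
print(kept, forgot)
```

When the update gate is pushed toward 1, the initial state of 0.9 survives 50 steps almost unchanged. When the gate is pushed toward 0, the candidate state overwrites it right away. A trained GRU learns when to do which.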

Use Cases of LSTMs and GRUs

LSTMs and GRUs are particularly useful for tasks that require modeling long-term dependencies in the data. Some examples are as follows:

- Language Translation: They are effective in translation tasks where understanding the context over long sequences of text is important.

- Stock Market Prediction: LSTMs and GRUs can capture long-range temporal dependencies in financial time series data, making them suitable for stock market prediction.

When to Use LSTMs and GRUs

Opt for LSTMs or GRUs when your sequential data has long-term dependencies that standard RNNs cannot effectively capture. They provide better performance for tasks requiring remembering information over extended sequences.

Transformer Networks

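Transformers are a neural network architecture that uses self-attention to process input data. In self-attention, every position in a sequence computes a weighted combination of all positions, including itself, with weights based on how relevant the positions are to each other. Transformers have become the foundation of many state-of-the-art models in natural language processing. They changed how sequential data is handled by addressing a key limitation of RNNs and LSTMs, which process a sequence one step at a time. Attention relates distant positions directly and can process all positions in parallel.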

Figure: Transformer. Figure attribution: Yuening Jia, CC BY-SA 3.0, via Wikimedia Commons
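The core self-attention computation can be sketched in a few lines. This is a simplified version of scaled dot-product attention that makes several assumptions:

- There is a single head.
- There are no learned query, key, or value projections; the token vectors are used directly as queries, keys, and values.
- There is no masking and no positional encoding.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    # tokens: list of d-dimensional vectors, used directly as queries, keys and values
    d = len(tokens[0])
    outputs = []
    for q in tokens:
        # Scaled dot-product score between this query and every key
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)  # attention weights for this position sum to 1
        # Output = attention-weighted average of the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return outputs


# Hypothetical 2-dimensional embeddings for a 3-token sequence
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

The output for each position is computed independently of the other positions. This is why transformers parallelize well, unlike the step-by-step loop of an RNN.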

Use Cases of Transformer Networks

Transformers have driven major advances across many domains, including the following.

- Machine Translation: Transformers are highly effective in translating text from one language to another.

- Text Summarization: They can generate concise summaries of long documents.

- Question Answering: Transformers power models that answer questions based on text passages.

- Speech Recognition: They are used in processing sequential audio data for tasks like speech-to-text conversion.

- Time Series Analysis: Transformers can be applied to time series forecasting by capturing dependencies across different time steps.

- Bioinformatics: They are used for protein structure prediction and other sequence-based tasks in biology.

When to Use Transformers

Transformers are particularly well suited to natural language processing tasks where capturing context and long-range dependencies within text is essential. They perform especially well on tasks that require understanding the nuances and structure of language.

Generative Adversarial Networks (GANs)

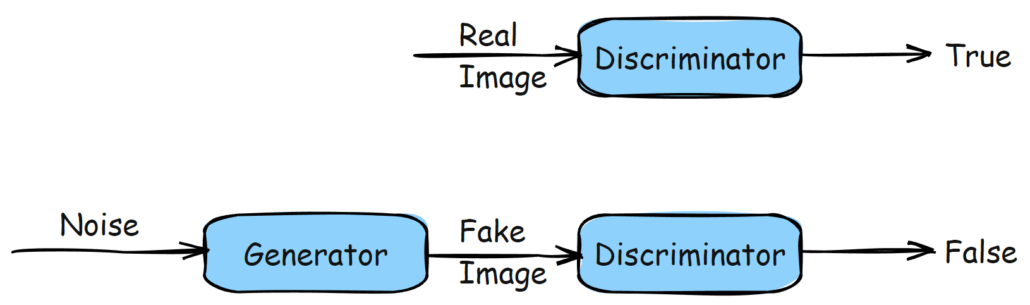

Generative Adversarial Networks (GANs) are a type of machine learning framework that consists of two neural networks: the generator and the discriminator. The generator is responsible for creating synthetic data, while the discriminator’s role is to differentiate between the real and generated data. These two networks are trained simultaneously in a competitive manner, with the generator aiming to generate data that is indistinguishable from real data, and the discriminator striving to become increasingly proficient at identifying the generated data. As a result, GANs are known for their ability to produce realistic synthetic data across various domains, including images, videos, and text.

Figure: GAN.
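The competition between the two networks is usually expressed as two binary cross-entropy losses. The sketch below computes both losses from discriminator outputs, which are probabilities that a sample is real. It assumes the common non-saturating generator loss rather than the original minimax form, and it leaves out the networks themselves and the optimizer. A full training loop would alternate between two updates: one that lowers the discriminator's loss, and one that lowers the generator's loss.

```python
import math


def bce(p, target, eps=1e-12):
    # Binary cross-entropy for a single predicted probability p
    return -(target * math.log(p + eps) + (1.0 - target) * math.log(1.0 - p + eps))


def discriminator_loss(d_real, d_fake):
    # The discriminator wants D(real) -> 1 and D(generated) -> 0
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    # Non-saturating generator loss: the generator wants D(generated) -> 1
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs for a small batch
sharp = discriminator_loss(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])
fooled = discriminator_loss(d_real=[0.5, 0.4], d_fake=[0.8, 0.9])
print(sharp < fooled)                                 # True
print(generator_loss([0.1]) > generator_loss([0.9]))  # True
```

A discriminator that separates real from generated samples has a low loss. The generator's loss falls as it succeeds in fooling the discriminator, and this tension drives both networks to improve.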


Use Cases of GANs

GANs have found prominent use in various domains due to their ability to produce realistic data and creative outputs. Some of the key use cases of GANs are as follows.

- Image Generation: GANs are famous for generating realistic images. They are also used for related tasks such as producing high-resolution photos from low-resolution inputs (super-resolution).

- Data Augmentation: They can generate synthetic data to augment training datasets.

- Creative Applications: GANs are used in artistic applications like generating artwork or music.

When to Use GANs

GANs are particularly useful for creative tasks and data augmentation in cases where obtaining real data is challenging. They are a great choice when you need to generate new, realistic data samples.

Autoencoders

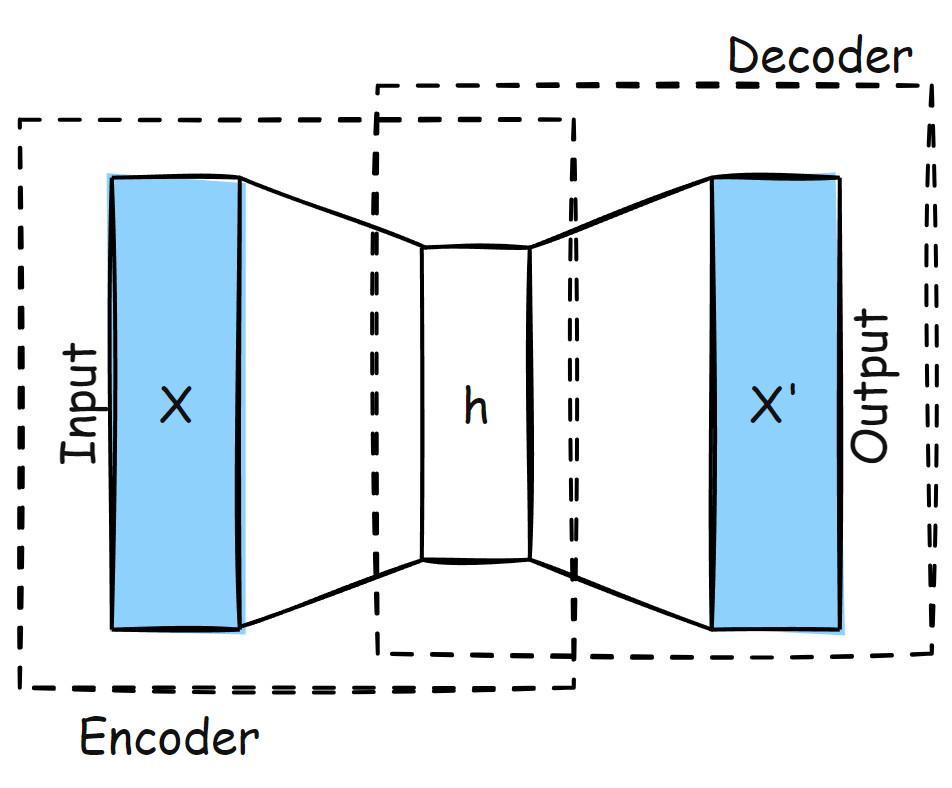

Autoencoders are a type of neural network used for unsupervised learning. They are designed to learn compact, efficient encodings of input data. Autoencoders have two main parts: an encoder, which compresses the input data into a lower-dimensional representation, and a decoder, which reconstructs the original input from that compressed representation.

Figure: Autoencoder.


Use Cases of Autoencoders

Autoencoders have various practical applications across different domains. Below are some of the key use cases.

- Dimensionality Reduction: Autoencoders can reduce the dimensionality of data, making it easier to visualize or process.

- Anomaly Detection: By learning to reconstruct normal data, autoencoders can identify anomalies by their reconstruction errors.

- Data Denoising: They can remove noise from data, such as images or signals.
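The encoder/decoder split and the reconstruction-error idea behind anomaly detection can be sketched together. This example uses a tiny linear autoencoder trained by plain gradient descent, and it is a toy under clear assumptions:

- 2-D inputs are compressed to a 1-D code.
- There are no activation functions.
- The gradients are written by hand.
- The training points are made up and lie on the line y = 2x.

The threshold in the hypothetical `is_anomaly` helper is arbitrary.

```python
def train_linear_autoencoder(data, lr=0.05, steps=2000):
    # 2-D input -> 1-D code -> 2-D reconstruction, no activations, small fixed init
    enc = [0.1, 0.1]
    dec = [0.1, 0.1]
    n = len(data)
    for _ in range(steps):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            code = enc[0] * x[0] + enc[1] * x[1]    # encoder
            recon = [dec[0] * code, dec[1] * code]  # decoder
            err = [recon[0] - x[0], recon[1] - x[1]]
            # Gradients of the squared error sum(err_i ** 2), averaged over the data
            d_code = 2.0 * (err[0] * dec[0] + err[1] * dec[1])
            for i in range(2):
                g_dec[i] += 2.0 * err[i] * code / n
                g_enc[i] += d_code * x[i] / n
        enc = [w - lr * g for w, g in zip(enc, g_enc)]
        dec = [w - lr * g for w, g in zip(dec, g_dec)]
    return enc, dec


def reconstruction_error(x, enc, dec):
    code = enc[0] * x[0] + enc[1] * x[1]
    return sum((dec[i] * code - x[i]) ** 2 for i in range(2))


def is_anomaly(x, enc, dec, threshold=0.1):
    # Hypothetical rule: flag inputs the model cannot reconstruct well
    return reconstruction_error(x, enc, dec) > threshold


# Made-up "normal" data: points on the line y = 2x
data = [[t, 2.0 * t] for t in (-1.0, -0.5, 0.5, 1.0)]
enc, dec = train_linear_autoencoder(data)

print(reconstruction_error([0.25, 0.5], enc, dec))  # close to 0
print(is_anomaly([1.0, -1.0], enc, dec))            # True
```

Points on the learned line are reconstructed almost perfectly from a single number. The point (1, -1) cannot be reconstructed this way, so its large reconstruction error flags it as an anomaly.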

When to Use Autoencoders

Autoencoders are useful when compressing data, detecting anomalies, or denoising data. They are also effective for unsupervised learning tasks where labeled data is not available.

Conclusions

There are many other types of neural networks, including diffusion models, variational autoencoders, and different variations of GANs. Choosing the right type of neural network for your task is essential for achieving optimal performance. Feedforward neural networks are great for simple tasks, while CNNs and RNNs are suited for more complex data structures like images and sequences. Advanced architectures like LSTMs, GRUs, GANs, autoencoders, and transformers offer powerful solutions for specialized problems.

Understanding the strengths and limitations of each type of neural network will help you select the best model for your specific use case, ultimately leading to more effective and efficient AI solutions.

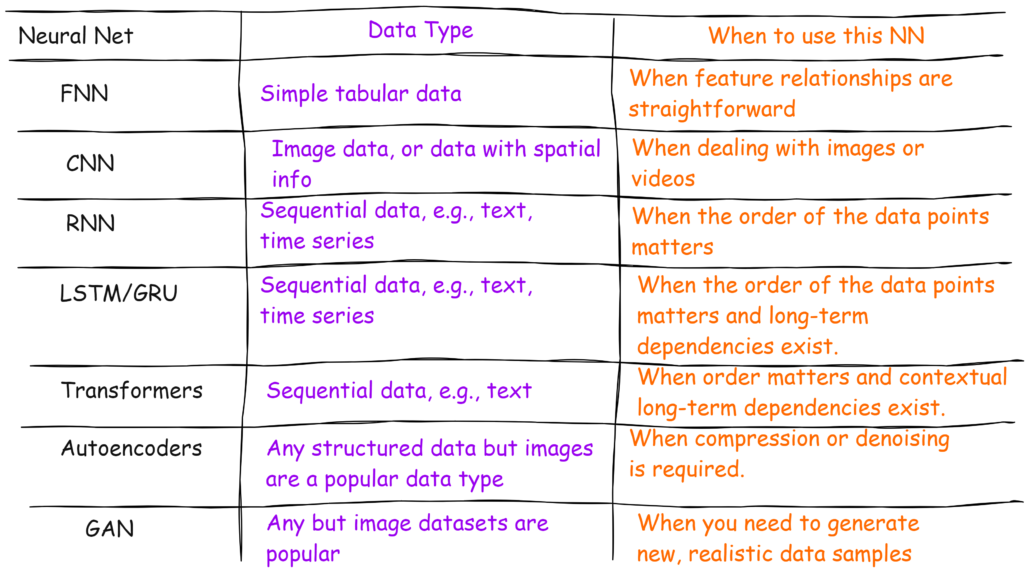

Table: The neural network types covered in this article, the data types they work with, and when to use each.